Beautiful Cloud and Automated Day Trading Experiment

The Beginning

Lyrics: “Last Christmas I gave you my heart, But the very next day you gave it away”.

It was actually last, last Christmas. The great white north was cold, and I was sitting at home, warm and cozy, when I started to wonder whether there was a way to generate a small but steady daily income from the stock market. I am no stock expert, I get emotional watching my stocks go up and down, and I have a full-time job. So I wondered whether I could write an automated day trading program that was 100% hands-free and 100% emotion-free, and that generated a small amount of money daily to supplement my income.

With this idea, I started Googling. Obviously, no sane person would reveal a successful day trading secret sauce, because once it was revealed and used by many, it would probably become useless in no time. Having failed to find a shortcut, I started my own journey.

The Hypothesis

With limited knowledge, I could only base my idea on the hope that:

- Past behavior repeats itself

- I could make a profit if there were more winning days than losing days

Selection of Stock Brokerage

I used the following criteria to select the stock brokerage:

- Has an API that I could use, through which I could programmatically make fully automated and unsupervised trades

- Has a low foreign exchange spread for converting Canadian dollars to US dollars, as I would trade US stocks, which have larger volumes and therefore better liquidity

- Has low trading commissions, as I expected my program to trade multiple times a day. Lower commissions meant lower cost and more profit

Based on those criteria, I selected Interactive Brokers Canada as the brokerage.

Selection of Stocks to Trade

I used the following criteria to select the stocks to trade:

- Has huge liquidity, meaning my program could quickly buy and sell without difficulty

- Has a high stock price, because Interactive Brokers charges trading commission based on the number of shares traded. Because the principal used for each trade is fixed, the higher the stock price, the fewer shares my program would buy. Fewer shares mean a lower commission, which reduces the trading cost and increases profit

- Has a small bid/ask spread most of the time. A larger bid/ask spread increases the chance that a trade loses money

Based on the criteria, I selected SPY, QQQ and MSFT.
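The share-price reasoning above can be checked with a little arithmetic. This is only a sketch: the principal, the per-share rate and the per-order minimum below are placeholder values I made up for illustration, not figures taken from Interactive Brokers' fee schedule.

```python
import math


def shares_for_principal(principal: float, price: float) -> int:
    """Whole shares that a fixed principal can buy at a given price."""
    return math.floor(principal / price)


def round_trip_commission(shares: int,
                          rate_per_share: float = 0.005,  # assumed, illustrative
                          min_per_order: float = 1.0) -> float:  # assumed, illustrative
    """Commission for one buy plus one sell under a per-share fee with a minimum."""
    per_order = max(shares * rate_per_share, min_per_order)
    return 2 * per_order


principal = 100_000.0  # hypothetical fixed principal per trade
for price in (50.0, 400.0):
    n = shares_for_principal(principal, price)
    print(f"price={price:.0f} shares={n} round-trip commission={round_trip_commission(n):.2f}")
```

Under these assumed numbers, the same principal buys 2000 shares at 50 but only 250 shares at 400, so the per-share commission on the higher-priced stock is a fraction of the other.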

Gathering Stock Data

Interactive Brokers provides a trading API. I used its TWS API to gather stock market data. To get real-time data, I signed up for their market data subscription and recorded every piece of stock price data I received between 9:30 AM and 4:00 PM Eastern Time, when the market was open. As the stocks I picked were highly liquid, multiple prices arrived every second. The simple Python code for demo purpose can be found here

After I collected 40 days’ worth of data, I started backtesting and searching for probabilities.

Parameter Search Space

My idea was to find consecutive stock price rises or drops to detect momentum, and then go long or short. If the momentum continued, I could potentially make some money. Long story short, here are the parameters I chose to search in order to find the “optimal” combination:

- Interval - how many seconds of price data would be grouped as a window

- Up Count - how many consecutive price rising windows

- Up Gap - how much the stock price should rise between adjacent windows

- Down Count - how many consecutive price drop windows

- Down Gap - how much the stock price should drop between adjacent windows

- Loss Limit - when to cut the loss if a single trade position’s loss is too much

- Daily Loss Limit - when to stop trading for the day if the accumulated daily loss is too much

- Profit Limit - when to realize gains for a single trade position

- Profit Watermark Percentage - for a single trade position, if the current profit drops below a certain percentage of highest possible profit achieved, close the position

- Daily Profit Limit - when to call it a day if enough profit has been realized

- Spread Limit - when not to trade if the spread between ask/bid is too much
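To make the entry parameters above concrete, here is a minimal sketch of how Interval, Up Count, Up Gap, Down Count and Down Gap could combine into a long/short signal. It is my reading of the parameter descriptions, not the actual program: the names `window_closes` and `entry_signal` are hypothetical, prices are assumed to be in cents, each window is represented by its last price, and windows with no ticks are simply skipped.

```python
from typing import List, Optional, Tuple

Tick = Tuple[int, int]  # (seconds since market open, price in cents); both assumed


def window_closes(ticks: List[Tick], interval: int) -> List[int]:
    """Group time-ordered ticks into `interval`-second windows, keeping each window's last price."""
    last_price = {}
    for seconds, price in ticks:
        last_price[seconds // interval] = price  # later ticks overwrite earlier ones
    return [last_price[w] for w in sorted(last_price)]


def entry_signal(closes: List[int], up_count: int, up_gap: int,
                 down_count: int, down_gap: int) -> Optional[str]:
    """Return "long", "short", or None based on the most recent window-to-window moves."""
    moves = [b - a for a, b in zip(closes, closes[1:])]
    # Long: the last `up_count` moves each rose by at least `up_gap`.
    if len(moves) >= up_count and all(m >= up_gap for m in moves[-up_count:]):
        return "long"
    # Short: the last `down_count` moves each dropped by at least `down_gap`.
    if len(moves) >= down_count and all(m <= -down_gap for m in moves[-down_count:]):
        return "short"
    return None


# Made-up ticks: three 5-second windows, each closing higher than the last.
ticks = [(0, 40000), (3, 40002), (5, 40005), (9, 40006), (10, 40010), (14, 40012)]
closes = window_closes(ticks, interval=5)
print(closes, entry_signal(closes, up_count=2, up_gap=3, down_count=2, down_gap=3))
```

With these made-up ticks the three windows close at 40002, 40006 and 40012, so both moves are at least 3 cents and the sketch returns "long". The exit parameters (loss limits, profit limits, watermark) would sit on top of this and are not shown.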

There were many parameters to consider, and each one needed to be searched. Since I had no idea what the optimal search space was, I had to cast a very wide net. The following table gives an idea of how big the potential search space was:

| Parameter | Example | Total # of possibilities |
|---|---|---|
| Interval | 5, 10, 15, 30, 60, 90, …, 600 | 23 |
| Up Count | 1, 2, …, 20 | 20 |
| Up Gap | 1, 2, …, 60 | 60 |
| Down Count | 1, 2, …, 20 | 20 |
| Down Gap | 1, 2, …, 60 | 60 |
| Loss Limit | 100, 200, …, 3000 | 30 |
| Daily Loss Limit | 100, 200, …, 3000 | 30 |
| Profit Limit | 100, 200, …, 3000 | 30 |
| Profit Watermark Percentage | 9, 19, …, 99 | 10 |
| Daily Profit Limit | 100, 200, …, 3000 | 30 |
| Spread Limit | 1, 2, …, 10 | 10 |

Searching the above parameter space on 40 days of stock data for a single stock would require 107,308,800,000,000,000 rounds of simulated trading days. I didn’t even know how to say this number in English, so I asked ChatGPT, and it gave me:

One hundred seven quadrillion, three hundred eight trillion, eight hundred billion
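The figure above is simply the product of the per-parameter counts in the table, multiplied by the 40 days of data. The sketch below reproduces it. The value lists are rebuilt from the table's examples, and the step sizes hidden behind each "…" are my assumptions, chosen so that the counts match the table.

```python
import math

# Candidate values per parameter, reconstructed from the table's examples.
# Step sizes behind each "…" are assumed so that the counts match the table.
grid = {
    "Interval": [5, 10, 15] + list(range(30, 601, 30)),
    "Up Count": range(1, 21),
    "Up Gap": range(1, 61),
    "Down Count": range(1, 21),
    "Down Gap": range(1, 61),
    "Loss Limit": range(100, 3001, 100),
    "Daily Loss Limit": range(100, 3001, 100),
    "Profit Limit": range(100, 3001, 100),
    "Profit Watermark Percentage": range(9, 100, 10),
    "Daily Profit Limit": range(100, 3001, 100),
    "Spread Limit": range(1, 11),
}

sizes = {name: len(values) for name, values in grid.items()}
combinations = math.prod(sizes.values())  # one backtest per parameter combination
days = 40                                 # days of recorded data
simulated_days = combinations * days

print(f"{combinations:,} parameter combinations")
print(f"{simulated_days:,} simulated trading days")
```

That is 2,682,720,000,000,000 parameter combinations, each replayed over 40 days.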

Writing and Optimizing the Simulating/Backtesting Program

Obviously no human being I know of could search this huge parameter space by hand in their lifetime, so I decided to ask my computers to do it. Initially I wrote a single-threaded Python program to run the test, and shortly after I started running it, I found it was too slow. So I put in some extra effort to make it a multithreaded Python program that fully utilized the multiple CPU cores on my computer. It was much better, but still too slow.
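For readers who want to picture what spreading a parameter search across CPU cores looks like in Python, here is a minimal sketch. It is not the program described here: `backtest` is a hypothetical stand-in with a toy score, the three-parameter grid is made up, and the sketch uses worker processes rather than threads, since in standard CPython builds threads running pure-Python CPU-bound code are serialized by the global interpreter lock.

```python
import itertools
from concurrent.futures import ProcessPoolExecutor


def backtest(params):
    """Hypothetical stand-in for one simulated run; returns (profit, params)."""
    interval, up_count, up_gap = params
    # Toy score that peaks at (30, 3, 5); a real backtest would replay recorded ticks.
    profit = -abs(interval - 30) - abs(up_count - 3) - abs(up_gap - 5)
    return profit, params


def best_params(grid, workers=1):
    """Evaluate every combination in `grid` and return the best (profit, params) pair."""
    combos = itertools.product(*grid)
    if workers <= 1:
        return max(map(backtest, combos))  # plain sequential search
    # Each worker process receives chunks of combinations to evaluate.
    with ProcessPoolExecutor(max_workers=workers) as pool:
        return max(pool.map(backtest, combos, chunksize=256))


# Made-up grid: (intervals, up counts, up gaps).
toy_grid = ([5, 10, 15, 30, 60], [1, 2, 3, 4], [1, 5, 10])
```

Calling `best_params(toy_grid)` runs the search sequentially. Passing `workers=8` would fan the same work out to eight processes, and on platforms that spawn fresh interpreters that call should sit under an `if __name__ == "__main__":` guard.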

I love to optimize programs, so I turned to Go. After learning some Go basics and Googling, I converted my Python code to Go. What a night and day difference! I didn’t actually measure the difference, but if I had to guess, I wouldn’t be surprised if the Go version was 50-100 times faster than the Python version. I have to say that multi-threading in Go is super easy, much less complex than multi-threading in Java.

But even with the help of Go, it was still too slow to be practical. Although I have a number of computers at home, I did not want to scale out and turn it into a distributed computing problem; that was too much work. So the only option I had was to scale up. I looked around, and the AMD Ryzen Threadripper 64-core CPU caught my eye. With this CPU, I could in principle run my backtesting up to 64 times faster than on a single core. The catch was that this thing was expensive. If I recall correctly, the price of the CPU alone was maybe 5K to 7K CAD at that time. Throwing in a motherboard, memory, a case, etc., plus the electricity it would consume, the initial investment would have been too much for an experiment, especially since nobody knew whether my program would actually make me money in the end, right?

Then I turned to the Cloud for help.

Beautiful Cloud

The beauty of cloud computing is that I did not have to invest heavily at the beginning to buy hardware for an experiment. I could simply go to a Cloud provider, spin up a virtual machine, run my experiment, turn it off when the run was complete, and pay only for the time used.

First I chose AWS. The reason was simple: I had $200 of unused credit in my personal AWS account. Since I was not running mission-critical apps, I could save money by using spot instances, which had huge discounts compared to on-demand pricing. I remember choosing the Graviton-based instance with the most CPU cores available at that time (64 cores in total), and the speed and results were satisfactory.

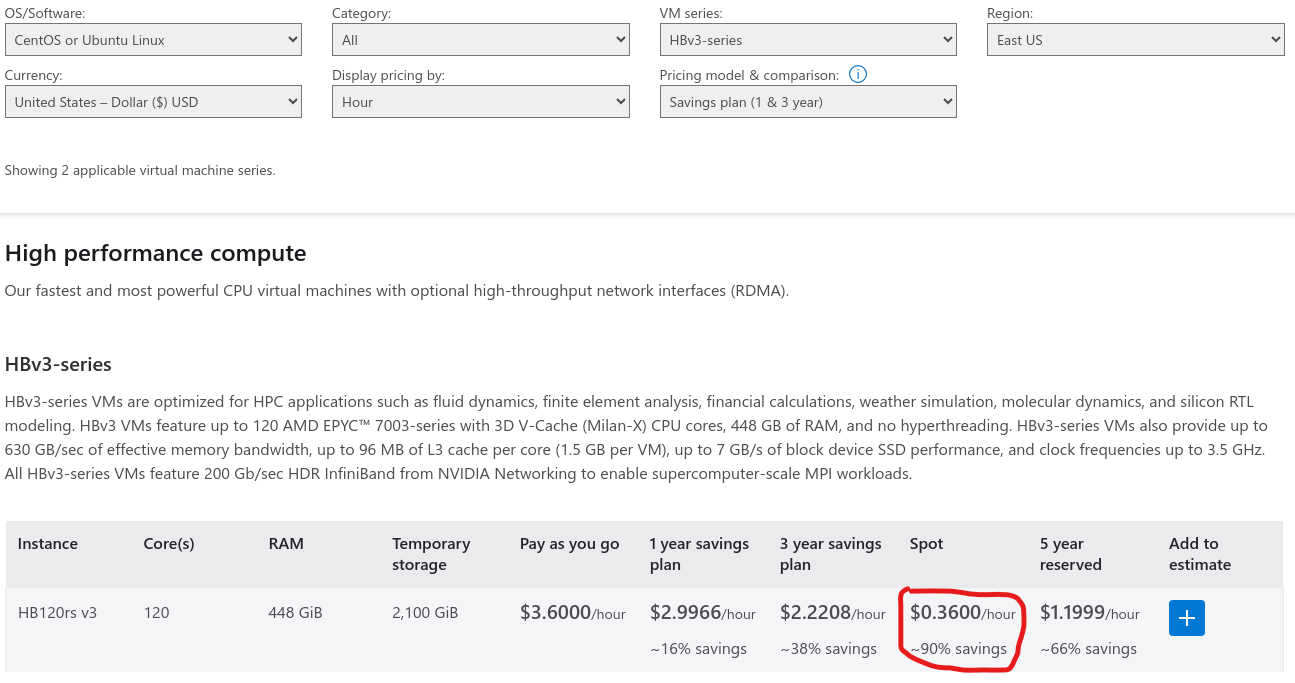

Once I had used up all the credits in my personal AWS account, I signed up for an Azure account and received a couple hundred dollars in credits to start. To my surprise, I was able to find a dirt cheap Azure spot instance with much better bang for the buck. This is a one-of-a-kind spot instance, and I don’t know why it existed, or why it still exists today. As of today (Nov 3, 2023), the 120 CPU core Azure VM with 448 GB of RAM, HBv3-series, only costs $0.36 USD/hour, see the screenshot below:

With the free credits and some extra money of my own, I was able to fully execute my experiment without breaking the bank.
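The spot price quoted above translates into a per-core price, and from there into a rough cost for a backtesting run. The sketch below shows the arithmetic. The price and core count come from the paragraph above, while the workload size and the throughput per core are hypothetical placeholders, since I never measured them.

```python
def core_hour_price(vm_price_per_hour: float, cores: int) -> float:
    """Price of one CPU core for one hour."""
    return vm_price_per_hour / cores


def run_cost(total_sims: float, sims_per_core_per_sec: float,
             cores: int, vm_price_per_hour: float):
    """Return (hours, cost) for a run, assuming perfect scaling across cores."""
    seconds = total_sims / (sims_per_core_per_sec * cores)
    hours = seconds / 3600
    return hours, hours * vm_price_per_hour


# 120 cores at $0.36 USD/hour, as quoted above.
print(f"{core_hour_price(0.36, 120):.4f} USD per core-hour")

# Hypothetical workload: 1e12 simulated days at an assumed 1e6 per core per second.
hours, cost = run_cost(total_sims=1e12, sims_per_core_per_sec=1e6,
                       cores=120, vm_price_per_hour=0.36)
print(f"{hours:.2f} hours, {cost:.2f} USD")
```

Perfect scaling across all cores is an optimistic assumption, and a spot instance can be evicted mid-run, so a real estimate would need some headroom.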

The Conclusion and Failed Experiment

“All good things come to an end”, right? Although I successfully used the Cloud to execute my experiments to the fullest extent, unfortunately they failed to produce a sure way to make a profit. I also tried MACD and SMA strategies, which only needed a home computer to test, but they failed too.

In short, here are the lessons learned from the failure:

- Intra-day stock price fluctuations do not necessarily repeat themselves, and past probabilities do not carry over to the future

- A streak of consecutive winning days could be just luck

- Frequent trading means large commissions paid to the broker, making them richer

- Frequently cutting losses makes the overall loss bigger and bigger

Now, the good lessons learned:

- Go is much, much faster than Python

- The Cloud liberates individuals to innovate and test quickly without breaking the bank

- Fully unsupervised and automated day trading with Interactive Brokers can run stably on a burstable Windows or Linux EC2 instance with only 4 GB of RAM

- It is likely cheaper to run the fully automated trading program in the Cloud than at home (at home one would need to buy a UPS, an always-on computer, two Internet connections from different ISPs, and a multi-WAN router to ensure the Internet connection is always on)